
Secure xDS Server Communication with Contour v0.14

Jul 23, 2019

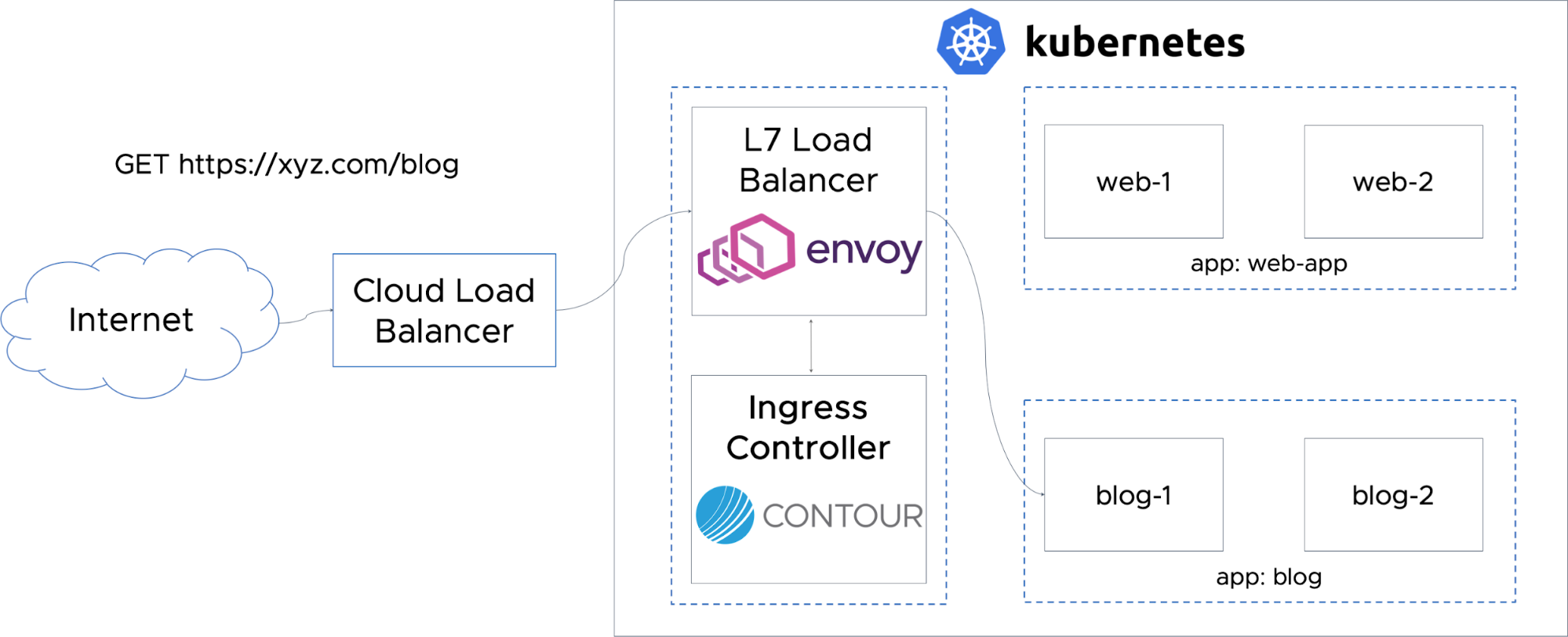

Contour is an Ingress controller for Kubernetes that works by deploying the Envoy proxy as a reverse proxy and load balancer. Contour supports dynamic configuration updates out of the box while maintaining a lightweight profile.

There are a few different models that you can implement when you deploy Contour to a Kubernetes cluster. Up until the latest Contour release, v0.14, we’ve typically used the co-located model, which places Contour and Envoy in the same pod communicating over localhost.

However, there are many use cases where this deployment model is less desirable. Contour v0.14 adds a more secure split deployment model, which separates Contour’s deployment from Envoy’s so that the two can have different life cycles.

Contour’s split model offers the following benefits for users:

- Contour and Envoy life cycles can be managed independently

- Less load on the Kubernetes API server

- Secure communication between the Contour xDS server and Envoy

Contour’s Architecture

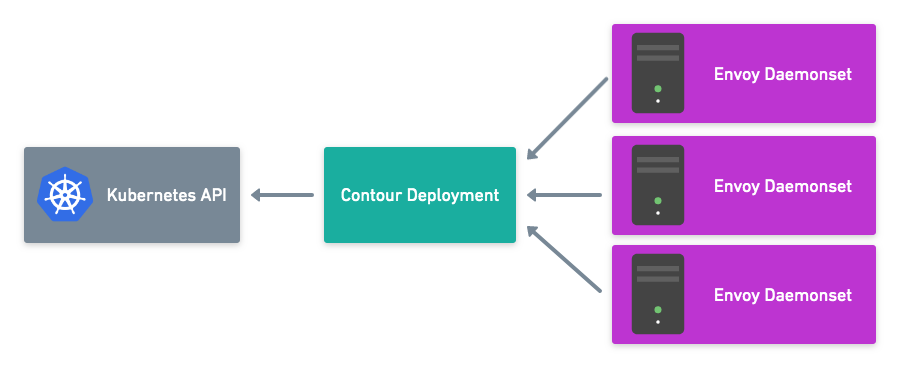

Contour provides the management server for Envoy by implementing an xDS server. Envoy connects to the Contour xDS server over gRPC and requests configuration items, such as clusters, endpoints, and routes, to configure itself. Contour integrates with the Kubernetes API server and watches for services, endpoints, secrets, Kubernetes Ingress resources, and Contour IngressRoute objects. When any of these changes, Contour rebuilds a set of configurations for Envoy to consume through the xDS server.
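The watch-and-rebuild loop described above can be pictured with a small Go sketch. This is a toy model, not Contour's code: the `Event`, `Snapshot`, and `Cache` types are hypothetical stand-ins for Kubernetes change notifications and for the configuration an xDS server would hand to Envoy, and only Service events are modeled.

```go
package main

import (
	"fmt"
	"sort"
)

// Event is a hypothetical stand-in for a change notification from the
// Kubernetes API server (for example, a Service being added or removed).
type Event struct {
	Kind    string // e.g. "Service", "Ingress"
	Name    string
	Deleted bool
}

// Snapshot is a hypothetical, simplified view of what an xDS server offers
// to Envoy: a version number plus the sorted names of the clusters.
type Snapshot struct {
	Version  int
	Clusters []string
}

// Cache holds the watched objects and rebuilds a snapshot on every event.
type Cache struct {
	services map[string]bool
	version  int
}

// NewCache returns an empty cache at version 0.
func NewCache() *Cache { return &Cache{services: map[string]bool{}} }

// Apply records one event, bumps the version, and returns a fresh snapshot.
func (c *Cache) Apply(e Event) Snapshot {
	if e.Kind == "Service" {
		if e.Deleted {
			delete(c.services, e.Name)
		} else {
			c.services[e.Name] = true
		}
	}
	c.version++
	clusters := make([]string, 0, len(c.services))
	for name := range c.services {
		clusters = append(clusters, name)
	}
	sort.Strings(clusters) // stable ordering so snapshots are comparable
	return Snapshot{Version: c.version, Clusters: clusters}
}

func main() {
	c := NewCache()
	events := []Event{
		{Kind: "Service", Name: "default/web"},
		{Kind: "Service", Name: "default/api"},
		{Kind: "Service", Name: "default/web", Deleted: true},
	}
	for _, e := range events {
		s := c.Apply(e)
		fmt.Printf("version=%d clusters=%v\n", s.Version, s.Clusters)
	}
}
```

In a real xDS server, the snapshot would be streamed to Envoy over gRPC rather than printed; the sketch only shows the "change in, new configuration out" shape.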

Secure Split Deployment Model

Until Contour release v0.14, the deployment model placed Contour and Envoy in the same pod, so gRPC communication occurred over localhost. This approach was convenient because Contour was deployed in a single service. However, as you scale out Contour in this model, Contour and Envoy scale together. Each instance of Contour adds a watch on the Kubernetes API server for the objects it acts on, adding load to the server.

The split model allows Contour and Envoy to scale independently. If a new version of Contour is released, you can now upgrade to the new version without having to restart each instance of Envoy in your cluster.
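To make the API server load argument concrete, here is a rough back-of-the-envelope sketch in Go. It assumes one watch per resource kind per Contour instance, uses the five resource kinds named in this post, and picks hypothetical pod counts; the real number of watches Contour opens may differ.

```go
package main

import "fmt"

// watchedKinds lists the resource kinds named in this post. Treating each
// as exactly one watch per Contour instance is a simplifying assumption.
var watchedKinds = []string{"Service", "Endpoints", "Secret", "Ingress", "IngressRoute"}

// apiWatches estimates the open watches on the Kubernetes API server for a
// given number of Contour instances.
func apiWatches(contourInstances int) int {
	return contourInstances * len(watchedKinds)
}

func main() {
	const envoyPods = 20      // hypothetical fleet size
	const contourReplicas = 2 // hypothetical replica count in the split model

	// Co-located: every Envoy pod also runs a Contour instance.
	fmt.Println("co-located watches:", apiWatches(envoyPods))
	// Split: the Contour replica count is independent of the Envoy fleet.
	fmt.Println("split watches:", apiWatches(contourReplicas))
}
```

Under these assumptions the co-located model opens 100 watches and the split model opens 10, and the split figure stays flat as more Envoy pods are added.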

A key new feature in Contour v0.14 is secured communication between Contour and Envoy: the xDS API connection now uses mutually verified self-signed certificates. There are three ways to obtain certificates for this connection. You can generate them by hand by following the step-by-step command-line examples in the Contour repo, use the job included in the example/contour example to generate them automatically, or provide your own based on your IT security requirements.

More new features in Contour v0.14

Version 0.14 also adds better support for deploying Envoy with various hostnames. Envoy routes traffic at L7, the HTTP routing level. Previous versions of Contour required requests to be sent over port 80 or port 443. Contour now configures Envoy to route requests without this requirement, which makes deployments on your local laptop or within your network infrastructure easier.
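One way to picture port-independent routing is to match a request on its hostname alone, whatever port appears in the `Host` header. The Go sketch below illustrates that idea only; it does not show how Contour or Envoy implement it, and the routing table, hostnames, and service names are hypothetical.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// hostOnly strips an optional ":port" suffix from a Host header value and
// lowercases the result, since hostnames are case-insensitive.
func hostOnly(hostHeader string) string {
	host := hostHeader
	if h, _, err := net.SplitHostPort(hostHeader); err == nil {
		host = h // a port was present and has been removed
	}
	return strings.ToLower(host)
}

// route looks up the backend for a request by hostname alone, ignoring the
// port. The table maps hypothetical virtual hosts to hypothetical services.
func route(table map[string]string, hostHeader string) (string, bool) {
	svc, ok := table[hostOnly(hostHeader)]
	return svc, ok
}

func main() {
	table := map[string]string{"app.example.com": "default/web"}
	hosts := []string{"app.example.com", "app.example.com:8080", "other.example.com:8080"}
	for _, h := range hosts {
		svc, ok := route(table, h)
		fmt.Printf("Host=%q matched=%v service=%q\n", h, ok, svc)
	}
}
```

With matching like this, a request to `app.example.com:8080` from a local kind cluster reaches the same backend as a request on port 80.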

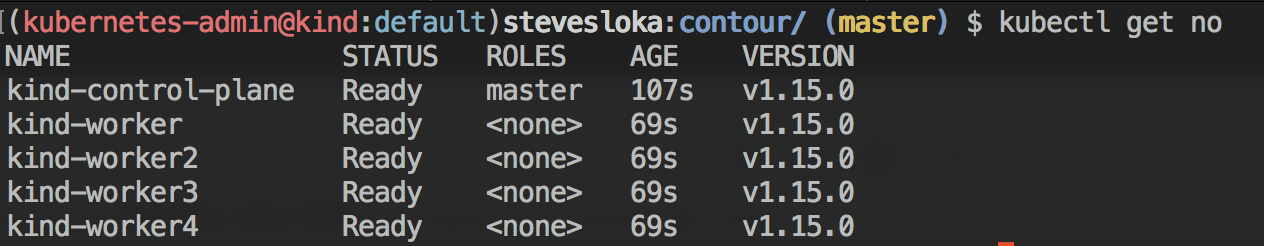

We recently wrote a blog post walking through how to deploy Contour to kind, which is a tool for creating Kubernetes clusters on your local development machine: Kind-ly running Contour.

Future Plans

The Contour project is very community-driven, and the team would love to hear your feedback! Many features (including IngressRoute) were driven by users who needed a better way to solve their problems. We’re working hard to add features to Contour, especially in expanding how we approach routing. Please look out for design documents for the new IngressRoute/v1 routing design, which will be a large discussion topic at our next community meeting!

If you are interested in contributing, a great place to start is to comment on one of the issues labeled Help Wanted and work with the team on how to resolve it.

We’re immensely grateful for all the community contributions that help make Contour even better! For version v0.14, special thanks go out to:

